Automated Batch Testing

This chapter discusses all aspects of automated testing, also known as batch testing. The coverage includes automatically executing tests, distributing tests to different machines, and processing the results produced by the test runs. (See also How to Do Automated Batch Testing.)

Automated Test Runs

Squish provides command line tools that make it possible to completely automate the running of tests. The tool for executing tests is squishrunner, but for it to work properly a squishserver must also be running—the squishrunner makes use of the squishserver to start AUTs and communicate with them.

Automated batch tests can be created on any of the platforms that Squish supports, including Windows and Unix-like platforms.

For example, here is a simple Unix shell script to execute the complete test suite ~/suite_myapp and save the results to ~/squish-results-`date`:

    #!/bin/bash
    # add Squish to PATH
    export SQUISH_PREFIX=/path/to/squish
    export PATH=$SQUISH_PREFIX/bin:$PATH
    # Dated directory to store report results:
    LOGDIRECTORY=~/squish-results-`date -I`
    # Execute the test suite with a local squishserver:
    squishrunner --testsuite ~/suite_myapp --local --reportgen xml3.5,$LOGDIRECTORY

Since we are using --local, it is not necessary to start a separate squishserver process in the background from our script.

We are using --reportgen to specify the kind of output to generate. For a list of all report-generator formats, see squishrunner --reportgen: Generating Reports.

Here is a similar example, written for Windows using the standard cmd.exe shell. Depending on the locale, the Windows variable %DATE% uses a period, a dash, or some other character to separate the numbers, so it cannot reliably be used to form a directory name. For that reason this example writes to an undated directory.

    REM add Squish to PATH
    set SQUISH_PREFIX=C:\path\to\squish
    set PATH=%SQUISH_PREFIX%\bin;%PATH%
    REM The Log Directory does not (but perhaps should) have today's date in it:
    set LOGDIRECTORY=%USERPROFILE%\squish-results
    REM Execute the test suite with a --local squishserver:
    squishrunner --testsuite %USERPROFILE%\suite_myapp --local --reportgen "xml3.5,%LOGDIRECTORY%"

One disadvantage of using shell scripts and batch files like this is that for cross-platform testing we must maintain separate scripts for each platform. We can avoid this problem by using a cross-platform scripting language which would allow us to write one script and run it on all platforms we were interested in. Here is an example of such a script written in Python, which you can find in SQUISHDIR/examples/regressiontesting/squishruntests-simple.py.

    #!/usr/bin/env python
    # -*- encoding=utf8 -*-
    # Copyright (C) 2018 - 2021 froglogic GmbH.
    # Copyright (C) 2022 The Qt Company Ltd.
    # All rights reserved.
    #
    # This file is part of an example program for Squish---it may be used,
    # distributed, and modified, without limitation.
    #
    # For a script with more options and configurability, see 'squish6runtests.py'
    # This script assumes squishrunner and squishserver are both in your PATH

    import os
    import sys
    import subprocess
    import time


    def is_windows():
        platform = sys.platform.lower()
        return platform.startswith("win") or platform.startswith("microsoft")


    # Create a dated directory for the test results:
    LOGDIRECTORY = os.path.normpath(
        os.path.expanduser("~/results-%s" % time.strftime("%Y-%m-%d"))
    )
    if not os.path.exists(LOGDIRECTORY):
        os.makedirs(LOGDIRECTORY)

    # execute the test suite (and wait for it to finish):
    exitcode = subprocess.call(
        [
            "squishrunner",
            "--testsuite",
            os.path.normpath(os.path.expanduser("~/suite_myapp")),
            "--local",
            "--reportgen",
            "xml3.5,%s" % LOGDIRECTORY,
        ],
        shell=is_windows(),
    )
    if exitcode != 0:
        print("ERROR: abnormal squishrunner exit. code: %d" % exitcode)

This script does the same job as the Unix shell script and Windows batch file shown earlier, and makes the assumption that squishrunner and squishserver are in the PATH. It should run on Windows, macOS and other Unix-like systems without needing any changes. It also checks the return code of squishrunner for abnormal termination.

squishrunner will run the specified test with all the required initializations and cleanups. The resulting report can then be post-processed as necessary—see Processing Test Results for details.

Once we have the script running on all desired platforms, we must ensure that it can be run automatically, say once a day. How to do this is beyond the scope of this manual, but if you require help you can always contact the Qt Support Center for assistance. Note that because Windows Services don't support a display for running GUI applications, it is not possible to execute the squishserver as a Windows Service.

Distributed Tests

Throughout the manual it is generally assumed that all testing takes place locally. This means that the squishserver, squishrunner, and the AUT, are all running on the same machine. This scenario is not the only one that is possible, and in this section we will see how to remotely run tests on a different machine. For example, let's assume that we work and test on computer A, and that we want to test an AUT located on computer B.

The first step is to install Squish and the AUT on the target computer (computer B). Note though, that we do not need to do this step for Squish for Web. Now—except if we are using Squish for Web on computer B—we must tell the squishserver the name of the AUT's executable and where the executable is located. This is achieved by running the following command:

squishserver --config addAUT <name_of_aut> <path_to_aut>

Later we will connect from computer A to the squishserver on computer B. By default the squishserver only accepts connections from the local machine, since accepting arbitrary connections from elsewhere might compromise security. So if we want to connect to the squishserver from another machine we must first register the machine which will try to establish a connection for executing the tests (computer A in this example), with the machine running the AUT and squishserver (computer B). Doing this ensures that only trusted machines can communicate with each other using the squishserver.

To perform the registration, on the AUT's machine (computer B) we create a plain text file in ASCII encoding called /etc/squishserverrc (on Unix or Mac) or c:\squishserverrc (on Windows). If you don't have write permissions to /etc or c:\, you can also put this file into SQUISH_ROOT/etc/squishserverrc on either platform. (And on Windows the file can be called squishserverrc or squishserverrc.txt at your option.) The file should have the following contents:

ALLOWED_HOSTS = <ip_addr_of_computer_A>

<ip_addr_of_computer_A> must be the IP address of computer A. (An actual IP address is required; using a hostname won't work.) For example, on our network the line is:

ALLOWED_HOSTS = 192.168.0.3

This will almost certainly be different on your network.

If you want to specify the IP addresses of several machines which should be allowed to connect to the squishserver, you can put as many IP addresses on the ALLOWED_HOSTS line as you like, separated by spaces. And if you want to allow a whole group of machines which have similar IP addresses, you can use wildcards. For example, to allow all machines whose IP addresses start with 192.168.0 to connect to this squishserver, you can specify an IP address of 192.168.0.*.
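To make the wildcard rule concrete, here is a rough sketch of how a space-separated pattern list like the one on the ALLOWED_HOSTS line could be checked against a connecting address. It uses Python's fnmatch module as a stand-in for the matching logic. This only illustrates the behavior described above and is not how squishserver itself is implemented; the helper name is_host_allowed and the sample addresses other than 192.168.0.3 are hypothetical.

```python
from fnmatch import fnmatchcase


def is_host_allowed(ip_address, allowed_hosts_value):
    """Return True if ip_address matches any space-separated pattern.

    allowed_hosts_value is the text to the right of 'ALLOWED_HOSTS ='.
    Illustration only: squishserver's real matching rules may differ.
    """
    patterns = allowed_hosts_value.split()
    return any(fnmatchcase(ip_address, pattern) for pattern in patterns)


# A single exact address, as in the example line above:
print(is_host_allowed("192.168.0.3", "192.168.0.3"))
# Several entries, one of them a wildcard (addresses are made up):
print(is_host_allowed("192.168.0.77", "10.0.0.5 192.168.0.*"))
print(is_host_allowed("192.168.1.77", "10.0.0.5 192.168.0.*"))
```

A check like this can be handy for validating a squishserverrc line before deploying it to many test machines.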

Once we have registered computer A, we can run the squishserver on computer B, ready to listen to connections, which can now come from computer B itself or from any of the allowed hosts, for example, from computer A.

We are now ready to create test cases on computer A and have them executed on computer B. First, we must start squishserver on computer B (calling it with the default options starts it on port 4322; see squishserver for a list of available options):

squishserver
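In an automated setup it can be useful to confirm that the remote squishserver is reachable before starting a test run. The following sketch simply polls the TCP port (4322 by default, as noted above) until a connection succeeds or a timeout expires. The function name wait_for_server and its parameters are our own, and the check only proves that something is listening on the port; it is an assumption that opening and closing a plain connection like this is harmless for squishserver, and it says nothing about whether the connecting machine is listed in ALLOWED_HOSTS.

```python
import socket
import time


def wait_for_server(host, port=4322, timeout=30.0, interval=0.5):
    """Poll until a TCP connection to host:port succeeds or timeout expires.

    Returns True as soon as a connection can be opened, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a connection to the server port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

A batch script could call wait_for_server with the address of computer B and skip or abort the run when it returns False.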

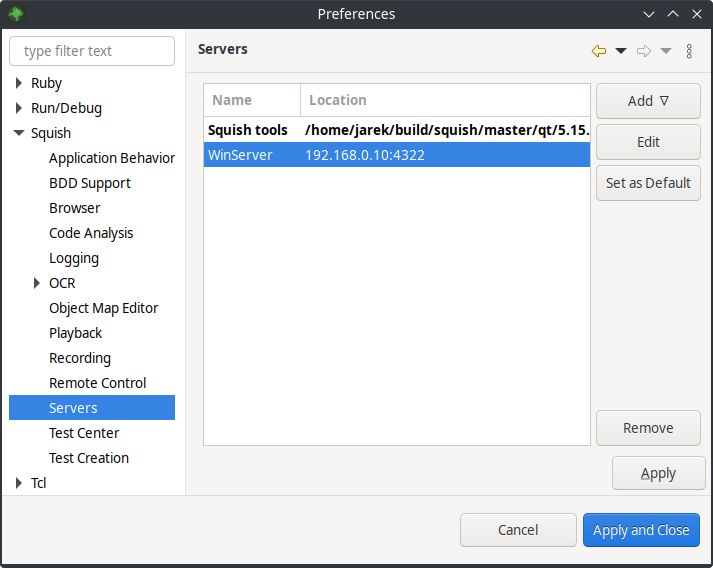

By default, the squishide starts squishserver locally on startup and connects to this local squishserver to execute the tests. To connect to a squishserver on a remote machine, select Edit > Preferences > Squish > Servers > Add > Remote server and enter the IP address of the machine running the remote squishserver in the Hostname/IP field (computer B). Change the port number only if the squishserver is started with a non-standard port number. In that case, set it to match the port number used on the remote machine.

The Servers preferences pane

Now we can execute the test suite as usual. One immediately noticeable difference is that the AUT is not started locally, but on the remote server. After the test has finished, the results become visible in the squishide on computer A as usual.

It is also possible to do remote testing using the command line. The command is the same as described earlier, only this time we must also specify a host name using the --host option:

squishrunner --host computerB.froglogic.com --testsuite suite_addressbook

The host can be specified as an IP address or as a name.

This makes it possible to create, edit, and run tests on a remote machine via the squishide. And by adding the --host option to the shell script, batch file, or other script file used to automatically run tests, it is possible to automate the testing of applications located on different machines and platforms, as we saw earlier in Automated Test Runs.
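As a sketch of how the cross-platform Python script shown earlier might be adapted, the helper below assembles the squishrunner argument list with either --local or --host, mirroring the two command lines used in this chapter. The function name build_runner_command, the host list, and the report directory are hypothetical; the example only prints the commands, which could then be passed to subprocess.call() as in the earlier script.

```python
def build_runner_command(suite, host=None, report_dir=None):
    """Assemble a squishrunner argument list.

    With host=None the --local option is used, as in the earlier scripts;
    otherwise --host points squishrunner at a remote squishserver.
    """
    if host is None:
        command = ["squishrunner", "--testsuite", suite, "--local"]
    else:
        command = ["squishrunner", "--host", host, "--testsuite", suite]
    if report_dir is not None:
        # Same report generator argument as in the earlier examples.
        command += ["--reportgen", "xml3.5,%s" % report_dir]
    return command


# Hypothetical list of machines that each run a squishserver:
HOSTS = ["computerB.froglogic.com", "192.168.0.5"]

for host in HOSTS:
    # One results directory per host (the path is an assumption).
    command = build_runner_command(
        "suite_addressbook", host=host, report_dir="/tmp/results-%s" % host
    )
    print(" ".join(command))
```

Keeping the command construction in one function means the same script can drive local and remote runs without duplicating the option handling.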

Note: When Squish tools are executed, they always check their license key. This shouldn't matter when using a single machine, but might cause problems when using multiple machines. If the default license key directory is not convenient for using with automated tests, it can be changed by setting the SQUISH_LICENSEKEY_DIR environment variable to a directory of your choice. This can be done in a shell script or batch file. See Environment Variables.
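When the test run is driven from a Python script rather than a shell script or batch file, the variable can be set just for the child process. The sketch below builds such an environment; the helper name runner_environment and the directory path in the usage note are hypothetical.

```python
import os


def runner_environment(license_dir, base_env=None):
    """Return a copy of the environment with SQUISH_LICENSEKEY_DIR set.

    The caller's environment (os.environ by default) is left unmodified.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["SQUISH_LICENSEKEY_DIR"] = license_dir
    return env
```

The result can be passed as the env argument of subprocess.call(), for example subprocess.call(command, env=runner_environment("/path/to/license-dir")).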

Processing Test Results

In the previous section we saw how to execute an AUT and run its tests on a target machine under the control of a separate machine, and we also saw how to automatically execute test runs using scripts and batch files. In this section we will look at processing the test results from automatic test runs by uploading XML reports to Squish Test Center.

By default, squishrunner prints test results to stdout as plain text. To make squishrunner use the XML report generator, specify --reportgen xml3.5 on the command line. If you want to get the XML output written into a file instead of stdout, specify --reportgen xml3.5,directorypath, e.g.:

squishrunner --host computerB.froglogic.com --testsuite suite_addressbook_py --reportgen xml3.5,/tmp/results

The XML report will be written into the directory /tmp/results.

Next, we can upload the report to Squish Test Center for further analysis, using the testcentercmd command-line tool.

testcentercmd --url=http://localhost:8800 --token=MyToken upload AddressBook /tmp/results --label=MyLabelKey1=MyLabelValue1 --label=OS=Linux --batch=MyBatch

The command above has the following options.

- The URL of a running instance of Squish Test Center, http://localhost:8800.

- An upload token, MyToken. See Squish Test Center documentation for more details.

- A command, upload.

- A project name, in this case AddressBook.

- A directory (or a zip file) of XML test results to upload.

- 0 or more labels, or key=value pairs for tagging the result in the database.

- An optional batch name, MyBatch, also for the purposes of organizing in the Squish Test Center database.

Project Names, Batches and Labels are concepts used to organize and select test reports in the web interface, and are explained in more detail here.
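In a Python batch script, the upload command can be assembled from its parts in the same way as the squishrunner call. The following sketch uses only the options shown in the command above; the function name build_upload_command is our own, and the resulting list is meant to be handed to subprocess.call().

```python
def build_upload_command(url, token, project, results, labels=None, batch=None):
    """Assemble a testcentercmd upload argument list.

    labels is an optional dict of key/value pairs, one --label per entry.
    """
    command = [
        "testcentercmd",
        "--url=%s" % url,
        "--token=%s" % token,
        "upload",
        project,
        results,
    ]
    for key, value in (labels or {}).items():
        command.append("--label=%s=%s" % (key, value))
    if batch is not None:
        command.append("--batch=%s" % batch)
    return command


# Reproduces the example command shown above:
print(" ".join(build_upload_command(
    "http://localhost:8800",
    "MyToken",
    "AddressBook",
    "/tmp/results",
    labels={"MyLabelKey1": "MyLabelValue1", "OS": "Linux"},
    batch="MyBatch",
)))
```

Passing the arguments as a list avoids quoting problems when label values or paths contain spaces.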

The xml Report Format

The document starts with the <?xml?> tag which identifies the file as an XML file and specifies the encoding as UTF-8. Next comes the Squish-specific content, starting with the SquishReport tag which, in Squish 6.6, has a version attribute set to 3.4. This tag may contain one or more test tags. The test tags themselves may be nested (i.e., there can be tests within tests), but in practice Squish uses top-level test tags for test suites, and nested test tags for test cases within test suites. (If we export the results from the Test Results view there will be no outer test tag for the test suite, but instead a sequence of test tags, one per test case that was executed.)

The test tag has a type attribute used to store the type of the test. Every test tag must contain a prolog tag as its first child with a time attribute set to the time the test execution started in ISO 8601 format, and must contain an epilog tag as its last child with a time attribute set to the time the test execution finished, again in ISO 8601 format. In between the prolog and epilog there must be at least one verification tag, and there may be any number of message tags (including none).
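Because the prolog and epilog times are in ISO 8601 format, the duration of a test can be computed directly from the two attributes. The sketch below assumes timestamps shaped like those in the sample report later in this section (date, time, and a numeric UTC offset); the function name elapsed_seconds and the second timestamp are made up for illustration.

```python
from datetime import datetime


def elapsed_seconds(prolog_time, epilog_time):
    """Return the seconds between a prolog and an epilog time attribute."""
    start = datetime.fromisoformat(prolog_time)
    end = datetime.fromisoformat(epilog_time)
    return (end - start).total_seconds()


# The start time is taken from the sample report; the end time is invented.
print(elapsed_seconds("2015-06-19T11:22:27+02:00", "2015-06-19T11:23:02+02:00"))
```

Since both values carry a UTC offset, the subtraction remains correct even if the two timestamps were recorded with different offsets.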

Every verification tag may contain several subelements.

The uri tag contains the relative path and filename of the test script or verification point that was executed, and the lineNo tag contains the number of the line in the file where the verification was executed. If uri starts with x-testsuite: or x-testcase: or x-results: the path is relative to the test suite, test case, or results directory, respectively. If the file path is outside of these directories, the uri tag will contain the absolute file path.

The name of the result tag inside a verification tag identifies the verification point type. The scriptedVerificationResult tag is used for verifications made from the test script (such as calls to the Boolean test.verify(condition) function). There are other possible types too: screenshotVerificationResult is for screenshot verifications, propertyVerificationResult is for property verifications (e.g., calls to the Boolean test.vp(name) function), and tableVerificationResult is for table verification point results.

Every verification point's result tag has two attributes: a time attribute set to the time the result was generated in ISO 8601 format, and a type attribute whose value is one of PASS, FAIL, XPASS, XFAIL, FATAL, or ERROR. In addition, the scriptedVerificationResult tag should contain at least one detail tag whose text describes the result. Normally, two tags, text and detail, are present: one that describes the result and another whose text gives a more detailed description of the result. For screenshot verifications there will be additional tags: an objectName tag whose content is the symbolic name of the relevant GUI object, and a failedImage tag whose content is either the text "Screenshots are considered identical" (for passes) or the URI of the actual image (for fails, i.e., where the actual image is different from the expected image).

In addition to verification tags, and at the same level (i.e., as children of a test tag), there can be zero or more message tags. These tags have two attributes, a time attribute set to the time the message was generated in ISO 8601 format, and a type attribute whose value is one of LOG, WARNING, or FATAL. The message tag's text contains the message itself.

Here is an example report of a test suite run. This test suite had just one test case, and one of its verifications failed. We have changed the line-wrapping and indentation for better reproduction in the manual.

    <?xml version="1.0" encoding="UTF-8"?>
    <SquishReport version="3.4" xmlns="http://www.froglogic.com/resources/schemas/xml3">
      <test type="testsuite">
        <prolog time="2015-06-19T11:22:27+02:00">
          <name><![CDATA[suite_test]]></name>
          <location>
            <uri><![CDATA[file:///D:/downloads/simple]]></uri>
          </location>
        </prolog>
        <test type="testcase">
          <prolog time="2015-06-19T11:22:27+02:00">
            <name><![CDATA[tst_case1]]></name>
            <location>
              <uri><![CDATA[x-testsuite:/tst_case1]]></uri>
            </location>
          </prolog>
          <verification>
            <location>
              <uri><![CDATA[x-testcase:/test.py]]></uri>
              <lineNo><![CDATA[2]]></lineNo>
            </location>
            <scriptedVerificationResult time="2015-06-19T11:22:27+02:00" type="PASS">
              <scriptedLocation>
                <uri><![CDATA[x-testcase:/test.py]]></uri>
                <lineNo><![CDATA[2]]></lineNo>
              </scriptedLocation>
              <text><![CDATA[Verified]]></text>
              <detail><![CDATA[True expression]]></detail>
            </scriptedVerificationResult>
          </verification>
          <verification>
            <location>
              <uri><![CDATA[x-testcase:/test.py]]></uri>
              <lineNo><![CDATA[3]]></lineNo>
            </location>
            <scriptedVerificationResult time="2015-06-19T11:22:27+02:00" type="FAIL">
              <scriptedLocation>
                <uri><![CDATA[x-testcase:/test.py]]></uri>
                <lineNo><![CDATA[3]]></lineNo>
              </scriptedLocation>
              <text><![CDATA[Comparison]]></text>
              <detail><![CDATA['foo' and 'goo' are not equal]]></detail>
            </scriptedVerificationResult>
          </verification>
          <epilog time="2015-06-19T11:22:27+02:00"/>
        </test>
        <epilog time="2015-06-19T11:22:27+02:00"/>
      </test>
    </SquishReport>
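A report in this format can be post-processed with any XML library. As a minimal sketch, the following Python code uses the standard xml.etree.ElementTree module to count verification results by their type attribute. The function name count_results is our own, and the embedded sample is a heavily abbreviated version of the report above (prolog, epilog, and location tags are omitted), so it should be read as an illustration rather than as a complete report.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Namespace declared on the SquishReport tag in the example report.
NS = "{http://www.froglogic.com/resources/schemas/xml3}"


def count_results(report_xml):
    """Count verification results in a report by their type attribute."""
    root = ET.fromstring(report_xml)
    counts = Counter()
    for verification in root.iter(NS + "verification"):
        for child in verification:
            # Matches scripted-, screenshot-, property- and
            # tableVerificationResult tags.
            if child.tag.endswith("VerificationResult"):
                counts[child.get("type")] += 1
    return counts


# Abbreviated sample for illustration only:
SAMPLE = """<SquishReport version="3.4"
    xmlns="http://www.froglogic.com/resources/schemas/xml3">
  <test type="testsuite">
    <test type="testcase">
      <verification>
        <scriptedVerificationResult time="2015-06-19T11:22:27+02:00" type="PASS"/>
      </verification>
      <verification>
        <scriptedVerificationResult time="2015-06-19T11:22:27+02:00" type="FAIL"/>
      </verification>
    </test>
  </test>
</SquishReport>"""

print(dict(count_results(SAMPLE)))
```

For the sample this reports one PASS and one FAIL. A batch script could use such counts to decide, for example, whether to send a notification after a nightly run.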

In examples/regressiontesting you can find some example scripts which execute the addressbook test suite on different machines and present the daily output on a Web page by post processing the XML and generating HTML. The How to Do Automated Batch Testing section explains how to automate test runs and process the test results to produce HTML that can be viewed in any web browser.

© 2024 The Qt Company Ltd. Documentation contributions included herein are the copyrights of their respective owners.

The documentation provided herein is licensed under the terms of the GNU Free Documentation License version 1.3 as published by the Free Software Foundation.

Qt and respective logos are trademarks of The Qt Company Ltd. in Finland and/or other countries worldwide. All other trademarks are property of their respective owners.